What is latency?

Latency is the time it takes for a data packet to pass from one point on a network to another. If, for example, Server 1 in London sends a data packet at 12:38:00.000 GMT to Server 2 in Chicago which receives the packet at 12:38:00.145 GMT. The amount of latency on this path is the difference between these two times: 0.145 seconds or 145 milliseconds. Most often, latency is measured between a user’s device and a data centre. Although data on the Internet can travel theoretically at the speed of light through fiber optic cables, it is not possible to completely avoid latency. Issues with latency can lead to web pages not loading, business processes being left incomplete and in the worst cases – loss of data and downtime.

What causes latency?

Latency can be caused by website construction or end-user issues but most of the time latency is caused by distance and networking routing.

Website Construction

A website can suffer latency if images are too large, or the content loaded from third parties makes it perform more slowly.

End-user issues

End-user issues can include a device being low on memory or CPU cycles can cause delays in loading.

Distance

The amount of time it takes for a request to reach a client device is referred to as Round Trip Time (RTT). While an increase of a few milliseconds might seem negligible, there are other considerations that can increase latency. There’s the to-and-fro communication necessary for the client and server to make that connection in the first place.

Network Routing

Problems with network hardware which the data passes through along the way.

Data travelling back and forth across the internet must cross multiple Internet Exchange Points (IXPs), where routers process and route the data packets, often having to break them up into smaller packets. All this additional activity adds a few milliseconds to RTT.

Physical issues

In a physical context, common network latency causes are the components that move data from one point to the next. Physical cabling such as routers, switches and Wi-Fi access points. In addition, latency can be influenced by other network devices like application load balancers, security devices, firewalls and Intrusion Prevention Systems (IPS).

If any (or all) of the above issues are affecting your business, you are suffering from high latency.

What are the costs of high latency?

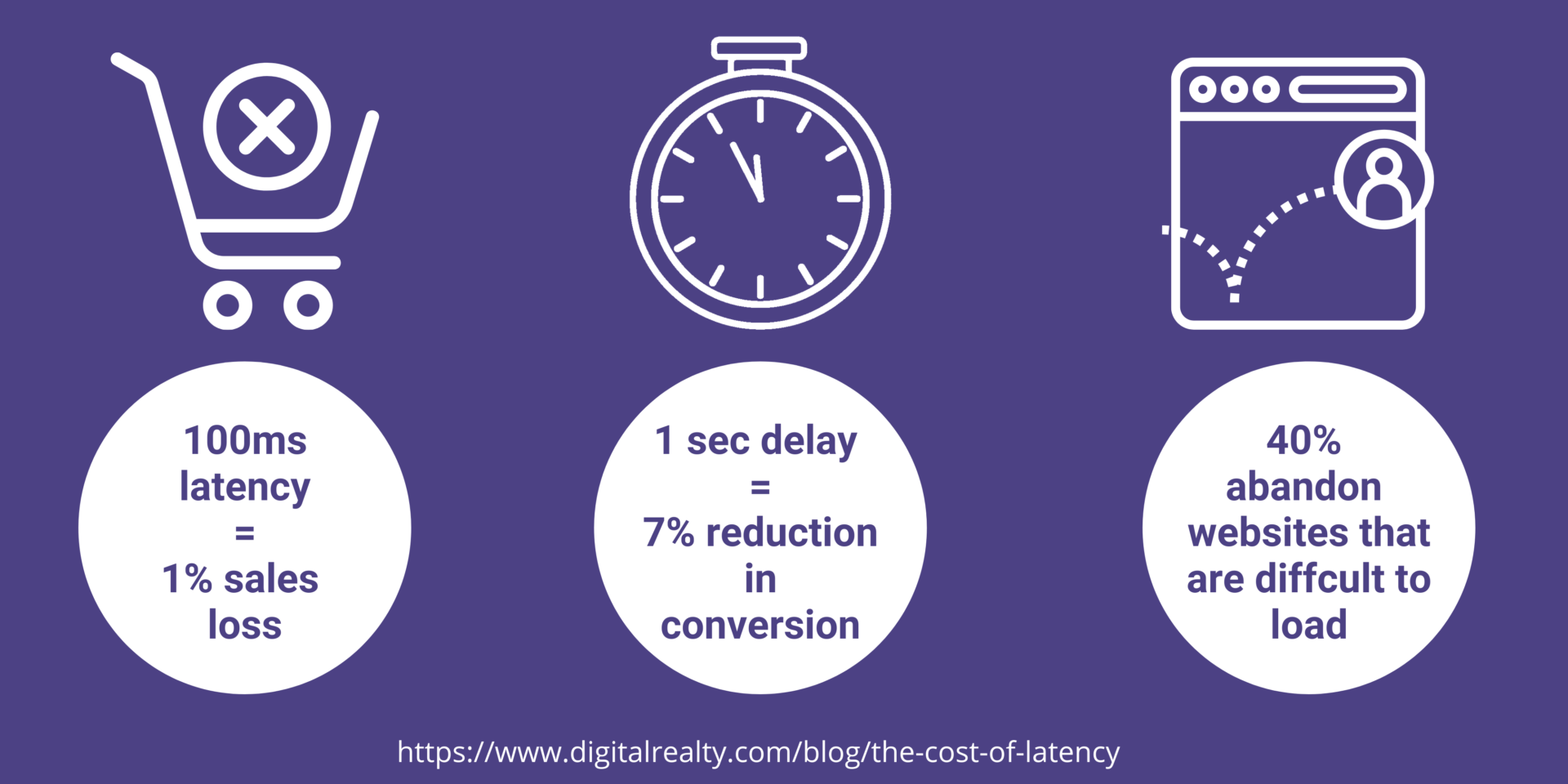

If you do not monitor and address the causes of high latency on your network, it can have an extremely negative impact on business profitability. Some of the consequences include:

Unreliable network performance.

Slower speeds are not the only consequence of high latency on your network. It also introduces inconsistency – speed fluctuations make it difficult to predict how your network will perform and don’t allow you to plan mitigation or control costs accordingly.

Impaired business operations leading to transaction losses.

As we have adapted our businesses to work in the cloud, we have also become accustomed to real-time business transactions and these have become central to business functionality. The consequences of high latency – such as slowness or failure to load, can be devastating to the productivity of a business. For example, if a customer cart takes too long to load you risk losing that transaction as the customers bounce off your website and move on to a competitor’s site.

Increased costs and reputational damage

Delays, lagging, and downtime are expensive – Gartner estimates the average cost of downtime for a business can be as much as USD$5,600 per minute. There is also the cost of reputational damage to be considered – the news is peppered with examples. A prominent bank experienced a two-day outage that caused its stock price to drop 2.3%.

So, what can be done to reduce latency?

The impacts of poor network speed and performance caused by latency can have a massive effect on businesses, making it a critical factor in your enterprise network. But reducing latency doesn’t have to be difficult. If you find you have high latency – it is important to take the following measures:

Measure packet delay

To improve network latency, a good starting point is having an accurate measure of how long a network communication takes from point of origin to fulfilment. Network managers have several tools to choose from to do this, including PING, Traceroute and My traceroute (MTR). A simple technique is to measure this using PING.

Avoid public internet

The issue with relying on public internet for connectivity; is the use of internet-based Virtual Private Networks. VPN tunnels may provide some protection for data in transit, but they still place users at the mercy of the public internet’s instability. We have all experienced slow and unreliable connectivity in times of peak demand or much more of an issue for businesses are outages when the connection goes down.

Ideally use a private network

Using a private network as the core of your access to connectivity, means that you can avoid the fluctuations of public internet, resulting in a far more stable and secure connection. A good private connectivity provider will also take care of mitigating against downtime and will reroute your data in case of outages. By architecting a private, scalable, end-to-end network with virtualised multi-cloud connectivity, you can positively impact your company’s bottom line with connectivity you can rely on under any workload.

If you need to keep some applications on public cloud you can protect your data from latency by using private interconnects

To optimise your connectivity, reducing its reliance on public internet transit, use private interconnects with the public cloud offerings you are using. If you are already using, or are looking to leverage, public cloud solutions from AWS or MS Azure, you can connect these clouds to your existing network using Interconnects. Direct Connect & Expressroute circuits are dedicated private links from your corporate WAN to your cloud environment edge, which will lower latency, enhance security and reduce the hops and jitter of public internet, making your network more reliable. Added benefits are that these solutions are highly scalable to support growing bandwidth requirements and include an SLA.

Virtualise your environment

To reduce the chance of latency and to create a stable network architecture – virtualisation brings a host of benefits. These also include removing the need for capital expenditure, speed of deployment, flexible upgrade options, scalability, resilience, and massively reduced recovery time following a problem.

Our Lifecycle Software case study illustrates how their latency issues were resolved by taking the steps outlined in this section. The first step was to migrate Lifecycle’s existing infrastructure to a Colo-environment within the amatis Reading data centre with the required connectivity. With a need to scale the platform and build in additional resilience, amatis architected a solution that leveraged Cisco UCS compute and application-aware storage to provide the required capacity and flexibility. The solution was virtualised across amatis’ two data centres to provide a highly resilient platform that was able to support an ‘always on’ environment.

“Prior to working with amatis we would have stability issues most months and were in danger of losing major customers; amatis transformed this and has provided us with a stable, scalable and secure platform that our customers can depend on.” Gael Martin, Technical Director, Lifecycle Software

By following these steps – measure and monitor packet delay, use a private network, use private interconnects where public internet use is unavoidable and virtualise your environment. If you are worried about latency and want to know more about how we can help you to reduce it contact the team at amatis.